$ ./mem-free-release-test.pl --all

Name:

mem-free-release-test.pl - example script to test and demonstrate how

and when memory is being released back to the system

Usage:

./mem-free-release-test.pl --all

./mem-free-release-test.pl --test 10

--test X execute one test only, default 1

--all execute all X+ tests

Description:

This script includes 1 to X memory allocation test cases. These cases

can be executed per one using "--test X" or all sequentially using

"--all" switches.

Each test will perform a code inside a block and print out memory usage

within and after leaving the block scope → when the local variable

memory is garbage collected and freed.

Cases where the allocated memory inside and outside the block are

(nearly) the same are not memory leaks, memory is returned back for

further usage, but only inside that given process. Cases where the

allocated memory decreases outside the block means that the memory was

freed and returned back to the operating system. See below link to

stackoverflow question 2215259 about malloc() and returning memory back

to the OS.

(Will malloc implementations return free-ed memory back to the system?

<

https://stackoverflow.com/questions/2215259>)

Long story short → M_TRIM_THRESHOLD in Linux is by default set to 128K,

only memory blocks of 128K size are returned back to the OS.

This does not help a lot with huge number of small data strictures

arrays-of-hashes-of-hashes, unless a small trick is used.

--------------------------------------------------------------------------------

Perl execution of this script

just do nothing....

done test case 1

allocated memory size: 9.90M

--------------------------------------------------------------------------------

create 100mio character big scalar using 100 character chunks

code: $t .= "a"x100 for 1..1_000_000;

length($t): 100000000

allocated memory size: 116.96M

done test case 2

allocated memory size: 116.96M

--------------------------------------------------------------------------------

create 100mio character big scalar using "x" constructor

code: $t .= "." x 100_000_000;

length($t): 100000000

allocated memory size: 200.65M

done test case 3

allocated memory size: 105.28M

--------------------------------------------------------------------------------

create array with 1_000 scalars, each little less then 128kB

code: push(@ta, "." x (128*1024-14)) for (1..1_000);

scalar(@ta): 1000

allocated memory size: 135.09M

done test case 4

allocated memory size: 135.09M

--------------------------------------------------------------------------------

create array with 1_000 scalars, each 128kB big

code: push(@ta, "." x (128*1024-13)) for (1..1_000);

scalar(@ta): 1000

allocated memory size: 138.95M

done test case 5

allocated memory size: 9.91M

--------------------------------------------------------------------------------

create hash with 1_000_000 scalars each 100 characters big

code: $th{"k".$key_id++} = "x".100 for (1..1_000_000);

scalar(keys %th): 1000000

allocated memory size: 108.93M

done test case 6

allocated memory size: 108.93M

--------------------------------------------------------------------------------

create 2x hashes with 1_000_000 scalars 100 characters big

code: for (1..1_000_000) { $th{"k".$key_id++} = "x".100; $th2{"k".$key_id++} = "x".100 }

scalar(keys %th): 1000000

scalar(keys %th2): 1000000

allocated memory size: 209.38M

done test case 7

allocated memory size: 209.51M

--------------------------------------------------------------------------------

create 2x hashes with 1_000_000 scalars 100 characters big, but with same set of keys

(Perl hash keys have shared storage in Perl, that is why less memory is used)

code: for (1..1_000_000) { $th{"k".$key_id} = "x".100; $th2{"k".$key_id++} = "x".100 }

scalar(keys %th): 1000000

scalar(keys %th2): 1000000

allocated memory size: 167.23M

done test case 8

allocated memory size: 167.36M

--------------------------------------------------------------------------------

create 2x hashes with 1_000_000 scalars (via two 1_000 loops) 100 characters big

code: for (1..1_000) { for (1..1_000) { $th{"k".$key_id++} = "x".100; $th2{"k".$key_id++} = "x".100 } }

scalar(keys %th): 1000000

scalar(keys %th2): 1000000

allocated memory size: 209.38M

done test case 9

allocated memory size: 209.51M

--------------------------------------------------------------------------------

create array with serialized 2x hashes with 1_000_000 scalars (via two 1_000 loops) 100 characters big

(serialization functions are also doing compression)

code: for (1..1_000) { for (1..1_000) { $th{"k".$key_id++} = "x".100; $th2{"k".$key_id++} = "x".100 }; push(@ta, freeze(\%th), freeze(\%th2)); (%th, %th2) = (); }

scalar(keys %th): 0

scalar(keys %th2): 0

scalar(@ta): 2000

length($ta[-1]): 18020

thaw($ta[-1]): HASH(0x861ad64)

sum(map {scalar(keys(%{thaw($_)}))} @ta): 2000000

allocated memory size: 44.55M

done test case 10

allocated memory size: 42.57M

--------------------------------------------------------------------------------

create array with serialized 2x hashes with 1_000_000 scalars (via two 500x2000 loop) 100 random characters big

(random characters there so that the serialization is not able to compress this data)

code: for (1..500) { for (1..2_000) { $key_id++; $th{"k".$key_id} .= chr(rand(256)) for 1..100; $th2{"k".$key_id} .= chr(rand(256)) for 1..100 }; push(@ta, freeze(\%th), freeze(\%th2)); (%th, %th2) = (); }

scalar(keys %th): 0

scalar(keys %th2): 0

scalar(@ta): 1000

length($ta[-1]): 228020

thaw($ta[-1]): HASH(0x85f1654)

sum(map {scalar(keys(%{thaw($_)}))} @ta): 2000000

allocated memory size: 229.71M

done test case 11

allocated memory size: 10.96M

--------------------------------------------------------------------------------

Tips for Less Memory Footprint:

read the Rules Of Optimization Club

<

http://wiki.c2.com/?RulesOfOptimizationClub>

just add more memory

Unless that code is taking gigabytes of memory, don't worry, memory

is cheap and plentiful these days, or?

have a good coffee and good monitoring at your side

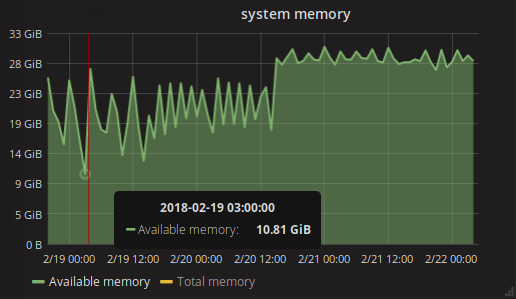

Chart the (virtual) server memory free over long period to spot

extremes and to see effects of your memory optimisations.

terminate and restart

The simplest way to release memory is to terminate the process,

possibly with status saved, and then start it again right away.

SystemD and daemontools understands this concept and can be

configured for continuous restarts.

work in chunks if possible

Query database using cursors, 1_000 rows at a time, process it and

then do the next.

If you need to load 1_000_000 data hashes, split them into chunks

that can be serialized. With or without compression freezed data

structures takes much less memory. Memory of those block, when

greater then 128k, will be returned back to the system.

fork before memory intensive operation

If the processed data just needs to be saved to disk, or

transferred, or post processed once all in-memory you can fork off a

child process to do this job. Will not only make that tasks run in

parallel, but also all the extra memory allocated inside the child

process will be cleared by process termination.

see Also:

Linux::Smaps - a Perl interface to /proc/PID/smaps

<

https://metacpan.org/pod/Linux::Smaps>

Sereal - Fast, compact, powerful binary (de-)serialization

<

https://metacpan.org/pod/Sereal>

Parallel::ForkManager - A simple parallel processing fork manager

<

https://metacpan.org/pod/Parallel::ForkManager>

--------------------------------------------------------------------------------